If you haven’t read Part 1 yet, I suggest you do so first.

Seeing only what’s relevant

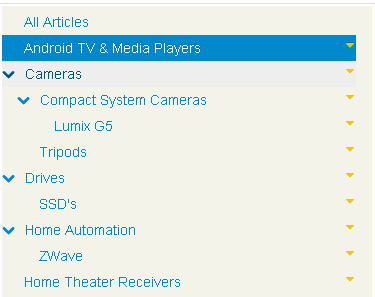

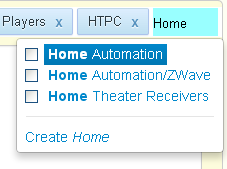

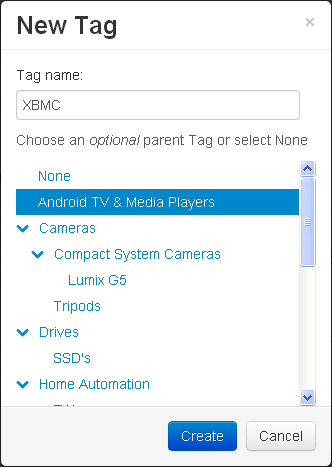

As you create more and more Notes it’s easy to get overwhelmed: you can’t see the wood for the trees. Filters remove the clutter, so you see only the notes that matter for the task at hand.

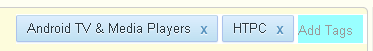

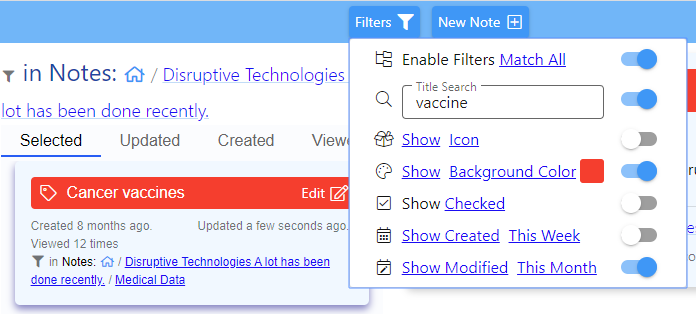

For example, let’s say you want to see only the notes updated in the last month, with a title color of red and whose title includes “vaccine”.

This screenshot shows how easy this is.

Title Search does a live fuzzy search on note titles. Tapping Show toggles a filter between Show and Hide: Show displays only the notes that match the criteria, whereas Hide hides them. Tapping Icon etc. lets you pick specific items from a menu. The switch to the right of each filter enables or disables that filter.

Finally, there is the Match All / Match Any toggle. Match All means notes must match all enabled filters, whereas Match Any includes notes that match any of the enabled filters. The toggle has no effect if only a single filter is enabled.
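For readers who think in code, here is a minimal sketch of how the Show / Hide, enable switch and Match All / Match Any settings described above could combine. It is not the actual Clibu Notes implementation: the `Note`, `Filter` and `apply_filters` names are hypothetical, the title test is a plain case-insensitive substring match rather than a real fuzzy search, and treating a Hide filter as "passes when the note does not match" is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List


@dataclass
class Note:
    title: str
    title_color: str
    updated: datetime


@dataclass
class Filter:
    matches: Callable[[Note], bool]  # the filter's criteria
    show: bool = True                # True = Show, False = Hide
    enabled: bool = True             # the switch to the right of the filter

    def passes(self, note: Note) -> bool:
        # Assumption: a Hide filter passes notes that do NOT match its criteria.
        hit = self.matches(note)
        return hit if self.show else not hit


def apply_filters(notes: List[Note], filters: List[Filter],
                  match_all: bool = True) -> List[Note]:
    active = [f for f in filters if f.enabled]
    if not active:
        return list(notes)  # no enabled filters: nothing is filtered out
    combine = all if match_all else any  # Match All vs Match Any
    return [n for n in notes if combine(f.passes(n) for f in active)]


# Hypothetical sample data; "now" is fixed so the example is repeatable.
now = datetime(2020, 6, 1)
notes = [
    Note("Vaccine trial results", "red", now - timedelta(days=3)),
    Note("Vaccine history", "blue", now - timedelta(days=5)),
    Note("Shopping list", "red", now - timedelta(days=90)),
    Note("Old ideas", "blue", now - timedelta(days=200)),
]
filters = [
    Filter(lambda n: now - n.updated <= timedelta(days=30)),  # updated in the last month
    Filter(lambda n: n.title_color == "red"),                 # title color is red
    Filter(lambda n: "vaccine" in n.title.lower()),           # simplified title search
]

print([n.title for n in apply_filters(notes, filters, match_all=True)])
print([n.title for n in apply_filters(notes, filters, match_all=False)])
```

With Match All only the note that satisfies all three filters remains, while Match Any keeps every note that satisfies at least one of them. With a single enabled filter, `all` and `any` give the same answer, which is why the toggle has no effect in that case.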

For a more exhaustive demonstration click on the image below.

As Filters change, the Tree and Notes grid update to show only the notes that match. Tree items that are filtered out, but must still be displayed because they are ancestors of matching notes, are shown dimmed so you can tell them apart.
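The dimming rule can be sketched as a small tree walk. Again, this is only an illustration under stated assumptions, not how Clibu Notes does it internally: `TreeItem` and `tree_display_states` are hypothetical names, and items are identified by unique names purely to keep the example short.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class TreeItem:
    name: str
    children: List["TreeItem"] = field(default_factory=list)


def tree_display_states(root: TreeItem, matching: Set[str]) -> Dict[str, str]:
    """Map each visible item to "normal" or "dimmed"; hidden items are omitted."""
    states: Dict[str, str] = {}

    def visit(item: TreeItem) -> bool:
        # Visit every child first (a list, not a generator, so none are skipped).
        child_visible = [visit(child) for child in item.children]
        if item.name in matching:
            states[item.name] = "normal"   # matches the filters
            return True
        if any(child_visible):
            states[item.name] = "dimmed"   # filtered out, but an ancestor of a match
            return True
        return False                       # filtered out with no matching descendants

    visit(root)
    return states


# Hypothetical tree: Health > Vaccines > Trial results, plus Health > Recipes.
tree = TreeItem("Health", [
    TreeItem("Vaccines", [TreeItem("Trial results")]),
    TreeItem("Recipes"),
])
print(tree_display_states(tree, matching={"Trial results"}))
```

Here "Trial results" is shown normally, its ancestors "Vaccines" and "Health" are shown dimmed, and "Recipes" is left out altogether.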

Keeping everything synchronized

The ability to update all open copies of Clibu Notes as you add, edit and rearrange notes, both efficiently and in real time, has been a major undertaking and is a must-have capability.

A picture is worth a thousand words, so without further ado:

Click on the image to enlarge.

This shows Clibu Notes open in two Browser Tabs, positioned one above the other.

Editing is occurring in the top tab, with the bottom tab updated in real time to match. Of course, automatic content synchronization isn’t restricted to Tabs in the same Browser (except in this release).

Clibu Notes instances in any Browser on any device, anywhere, will be updated in exactly the same way, using exactly the same Clibu Notes synchronization engine you see working above.

Taking this further, you can continue using Clibu Notes even when you don’t have an Internet connection, including full editing, adding new notes, rearranging the notes tree etc. Then, when you are next online, your changes and other online users’ changes will be synchronized with each other.

The ability to seamlessly access and work on your notes both offline and online is important to many of you and positions Clibu Notes at a level above similar applications.

In Conclusion

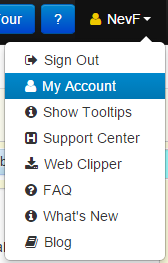

The About page in Clibu Notes includes a roadmap, which I suggest you read. About can be accessed via Help or the Settings menu in Clibu Notes.

To be added to the evaluation list and get immediate access to Clibu Notes v0.42.00, simply email info@clibu.com.

You can also follow us on Twitter.

As always we look forward to your suggestions and guidance.

You can add comments below and open tickets in our Help desk, accessed via the Settings menu in Clibu Notes. Or get in touch via email if you prefer.

Neville Franks, Author of Clibu Notes, Clibu etc. ©Soft As It Gets P/L 2020